February is the month of love, and for ÂTTN.LIVE, that means leaning into hearts, romance, and uncomfortable questions about how humans connect in a rapidly digitizing world. Flowers and chocolates still exist, sure—but alongside them are sex dolls, synthetic partners, and AI chatbots that whisper affection back to lonely users.

From people marrying cardboard cutouts and dolls to people developing romantic attachments to robots, Alexa-like assistants, and now advanced AI companions, love is evolving in ways that would have felt impossible just a decade ago. The question is no longer whether people are forming relationships with non-humans, but why, how often, and at what cost.

Are we witnessing human adaptability… or are we already living inside a Black Mirror episode?

This deep dive explores why people are marrying dolls, what the statistics say, the psychological effects behind synthetic love, and how platforms like Character.AI are reshaping intimacy—especially for the most vulnerable among us.

Human relationships have always been shaped by technology. Letters turned into phone calls. Phone calls turned into texts. Texts turned into DMs. Now, DMs are being answered by AI that listens endlessly, never judges, and never leaves.

According to reporting from the Daily Star, people across the globe have formally married objects: sex dolls, cardboard figures, and other inanimate companions. While it’s easy to laugh or recoil, sociologists and psychologists warn that these relationships are symptoms of much deeper social shifts rather than isolated eccentricities.

This trend isn’t about novelty. It’s about loneliness.

Several key factors emerge from the sociological and psychological research discussed in Men’s Health, The Society Pages, Medium analyses, and academic literature.

Men’s Health links the rise of sex doll companionship directly to modern loneliness. Many individuals—particularly men—report feeling socially isolated, romantically rejected, or emotionally invisible. Synthetic partners offer companionship without the risk of rejection.

Unlike human relationships, dolls don’t argue, cheat, leave, or disappoint. For some, that predictability feels safer than emotional vulnerability.

The Society Pages explains that synthetic partners provide complete control. The doll looks how the owner wants, behaves how they want, and exists solely for their emotional or sexual needs.

In a world where real relationships require compromise, empathy, and emotional labor, dolls offer intimacy without accountability.

Medium’s exploration into doll companionship highlights how individuals with past trauma—abusive relationships, bullying, or chronic social anxiety—may retreat into relationships where rejection is impossible.

This isn’t always about desire. Sometimes it’s about protection.

Hard numbers on doll marriages are limited, but the available data paints a clear directional trend.

While only a small percentage legally “marry” dolls, a much larger group forms emotionally exclusive bonds with them.

This is where things get complicated.

Doll companionship can temporarily reduce feelings of loneliness. However, psychologists warn that it may also reduce motivation to pursue real-world connections, reinforcing avoidance behaviors rather than healing them.

Synthetic partners never challenge harmful beliefs or behaviors. Over time, this can distort expectations of real relationships—where conflict and compromise are unavoidable.

According to psychological analysis referenced in sociological studies, one-sided attachment can intensify emotional dependency. When affection is simulated but not reciprocal, it may deepen loneliness rather than resolve it.

What happens when dolls don’t just sit silently—but talk back?

Platforms like Character.AI allow users to chat with AI-generated personalities. These bots can flirt, comfort, roleplay romance, and simulate emotional intimacy with surprising realism.

Unlike dolls, AI companions respond dynamically. They remember preferences, adapt their tone, and mirror emotional language. For lonely users, this can feel like a genuine connection.

TIME Magazine and Psychology Today document users describing AI relationships as “emotionally fulfilling,” with some admitting they prefer AI partners to human ones.

Character.AI bots are conversational agents trained to emulate fictional characters, historical figures, or entirely original personalities. Users can design romantic partners, best friends, mentors, or confidants who are available 24/7.

According to Internet Matters, these bots:

They are not conscious—but they feel attentive.

In some cases, yes.

Research from the Institute for Family Studies reveals that 1 in 4 young adults believe AI partners could replace real-life romance. This belief isn’t hypothetical—it’s already shaping behavior.

AI companions:

For individuals struggling with self-worth or social anxiety, that can be intoxicating.

But psychologists warn that replacing reciprocal relationships with simulated ones may stunt emotional development, especially among younger users.

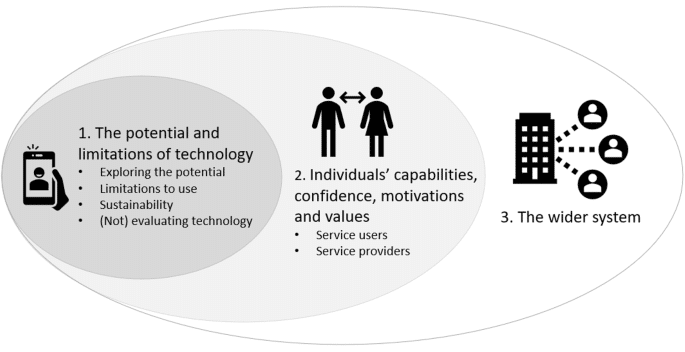

As AI companions become more emotionally responsive and immersive, concerns are mounting around who is most at risk—and why. Reporting and research increasingly point to two particularly vulnerable groups: children and teenagers navigating emotional development, and older adults experiencing chronic loneliness. In both cases, the issue isn’t casual use—it’s emotional dependence.

Health experts and child-safety organizations have repeatedly warned that minors are not developmentally equipped to distinguish between simulated emotional validation and genuine human empathy. According to guidance from HealthyChildren.org and reporting by BBC News, AI chatbots can blur emotional boundaries for young users, especially when those bots are designed to reassure, flatter, and “care” without limits.

These concerns escalated dramatically following high-profile legal cases involving Character.AI. Investigations reported by NBC News and CNN detail lawsuits filed after the death of a Florida teenager whose parents allege that prolonged interaction with Character.AI chatbots contributed to severe emotional distress and, ultimately, suicide.

According to court filings summarized in the reporting, the teen formed an intense emotional bond with AI characters that mirrored romantic and supportive relationships. Family members argue that the chatbot interactions reinforced isolation, encouraged emotional withdrawal from real-life relationships, and failed to provide safeguards when the teen expressed distress. While the lawsuits do not claim that AI alone caused the death, they argue that the platform lacked adequate protections for minors engaging in emotionally charged conversations.

The case has intensified calls for stricter age controls, clearer disclaimers, and stronger moderation of emotionally sensitive content. More broadly, it has forced a public reckoning with a difficult reality: when AI is always available, endlessly affirming, and emotionally responsive, vulnerable teens may begin to treat it as a primary support system—without realizing its limits.

For older adults, AI companionship presents a more ambiguous picture. Research and reporting suggest that conversational AI can ease feelings of isolation, particularly for individuals living alone or with limited mobility. However, experts warn that when AI becomes a replacement rather than a supplement to human interaction, it may deepen social withdrawal.

Older users facing bereavement, declining health, or shrinking social circles may gravitate toward AI companions because they offer conversation without effort or rejection. Over time, reliance on AI for daily emotional engagement can reduce motivation to seek real-world social contact, reinforcing the very isolation it initially helped soothe.

Across age groups, the underlying risk is emotional dependency. Scientific literature reviewed via ScienceDirect emphasizes that prolonged reliance on AI for emotional regulation and companionship can increase psychological vulnerability. Because AI systems are designed to be agreeable and responsive, they may unintentionally reinforce maladaptive coping strategies—such as avoidance of conflict, social withdrawal, or unrealistic expectations of constant validation.

Unlike human relationships, AI companions do not challenge users in meaningful ways, set boundaries, or require reciprocity. Over time, this imbalance can distort how individuals perceive intimacy, emotional support, and resilience.

In short, the danger is not that AI chatbots exist but that they are increasingly positioned, intentionally or not, as substitutes for human connection. The death at the center of the Character.AI lawsuits has sharpened this concern, highlighting the urgent need for stronger safeguards, clearer ethical boundaries, and a more honest public conversation about what AI companionship can and cannot replace.

This is the core question.

According to Upwork and education-focused resources, Character AI has legitimate uses:

The danger arises when AI is used as a replacement rather than a tool.

Character.AI itself has acknowledged safety concerns, rolling out community guidelines, moderation updates, and parental controls. But enforcement remains inconsistent.

Based on safety resources and platform guidance, yes—but with boundaries.

AI can simulate love—but it cannot reciprocate it meaningfully.

So, is Character AI bad?

Not inherently.

But when loneliness, trauma, and social disconnection collide with technology designed to simulate affection, the results can be dangerous—especially for kids, teens, and emotionally isolated adults.

The real purpose of Character AI isn’t to replace human love. It’s to assist, educate, and entertain. The moment it becomes a substitute for genuine human connection, we cross into ethically gray territory.

Love has always evolved. But hearts—real ones—still need other hearts to survive.

And no matter how convincing the AI becomes, love was never meant to be one-sided.

As we move deeper into this month of hearts and romance, perhaps the most radical act is choosing connection over convenience—and remembering that real love, unlike artificial affection, is messy, mutual, and profoundly human.

Daily Star. Meet the people who married random objects including dolls and cardboard cutouts.

https://www.dailystar.co.uk/news/weird-news/meet-people-who-married-random-36398456

Men’s Health. Men With Sex Dolls Are Opening Up About Loneliness.

https://www.menshealth.com/sex-women/a64146926/men-with-sex-dolls-loneliness-epidemic/

The Society Pages (Council on Contemporary Families). Why Some People Choose Synthetic Partners Over Human Ones.

https://thesocietypages.org/ccf/2022/03/01/why-some-people-choose-synthetic-partners-over-human-ones/

Medium – L. Ireland Right. Technology and Solitude: Exploring the Impact of Doll Companions in the Digital Age.

https://lirelandright.medium.com/technology-and-solitude-exploring-the-impact-of-doll-companions-in-the-digital-age-e6226368752e

Taylor & Francis Online. Synthetic Companionship and Human Relationships.

https://www.tandfonline.com/doi/abs/10.1080/01639625.2022.2105669

Internet Matters. What is Character AI?

https://www.internetmatters.org/advice/apps-and-platforms/entertainment/character-ai/

TIME Magazine. People Are Falling in Love With AI Chatbots.

https://time.com/6257790/ai-chatbots-love/

Psychology Today. How People Are Finding Love in Artificial Minds.

https://www.psychologytoday.com/us/blog/best-practices-in-health/202510/how-people-are-finding-love-in-artificial-minds

AI for Education. The Rise of AI Companions and Synthetic Relationships.

https://www.aiforeducation.io/blog/the-rise-of-ai-companions-synthetic-relationships

Institute for Family Studies. Artificial Intelligence and Relationships: 1 in 4 Young Adults Believe AI Partners Could Replace Real-Life Romance.

https://ifstudies.org/blog/artificial-intelligence-and-relationships-1-in-4-young-adults-believe-ai-partners-could-replace-real-life-romance

EM Lyon Knowledge. Would You Replace Your Boyfriend or Girlfriend With an AI Chatbot?

https://knowledge.em-lyon.com/en/would-you-replace-your-boyfriend-or-girlfriend-with-an-ai-chatbot/

HealthyChildren.org (American Academy of Pediatrics). Are AI Chatbots Safe for Kids?

https://www.healthychildren.org/English/family-life/Media/Pages/are-ai-chatbots-safe-for-kids.aspx

ScienceDirect. Psychological and Behavioral Impacts of AI Companions.

https://pdf.sciencedirectassets.com/776616/1-s2.0-S2451958825001307/main.pdf

NBC News. Character.AI Lawsuit After Florida Teen’s Death.

https://www.nbcnews.com/tech/characterai-lawsuit-florida-teen-death-rcna176791

BBC News. Character AI and Child Safety Concerns.

https://www.bbc.com/news/articles/ce3xgwyywe4o

The Guardian. Character AI Suicide Concerns and Child Safety Debate.

https://www.theguardian.com/technology/2025/oct/29/character-ai-suicide-children-ban

CNN. Teen Suicide Lawsuit Raises Questions About AI Chatbots.

https://www.cnn.com/2024/10/30/tech/teen-suicide-character-ai-lawsuit

Character.AI Official Blog. Community Safety Updates.

https://blog.character.ai/community-safety-updates/

Upwork Resources. How Character AI Can Be Used Productively.

https://www.upwork.com/resources/character-ai

Hello Teacher Lady. How to Use AI Character Chatbots in the Classroom.

https://www.helloteacherlady.com/blog/2024/3/how-to-use-ai-character-chatbots-in-the-classroom