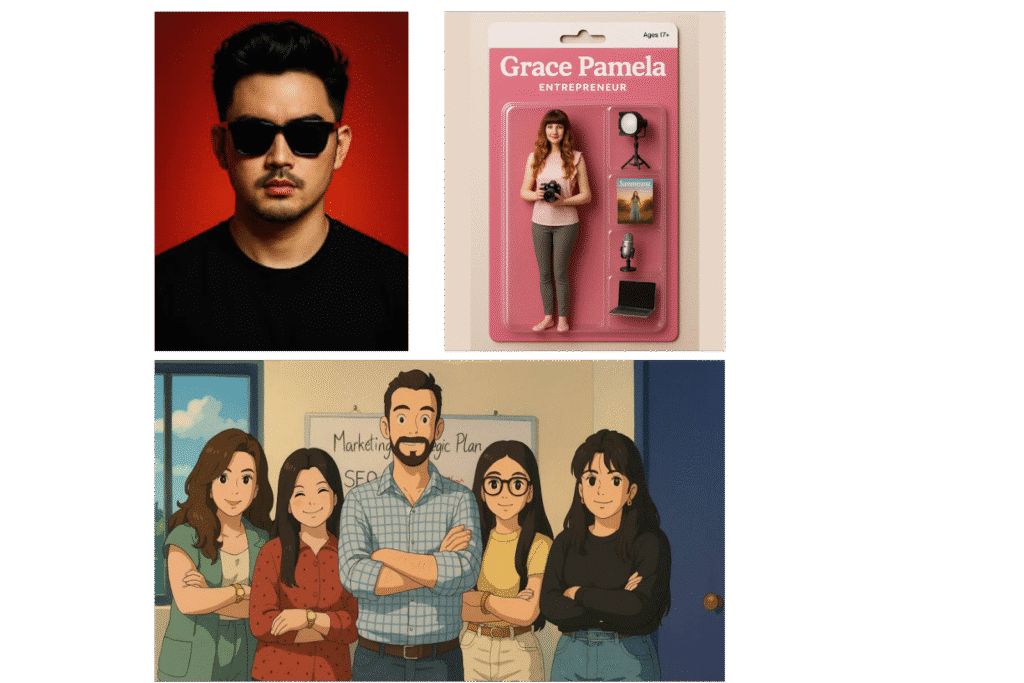

Over the past year, the Philippines has become a hotbed for viral AI-generated photo trends. You’ve probably seen them all over your feed: stylized “Red Studio” portraits, anime versions of your friends, doll-like mini figurines, or even Ghibli-style image transformations. These viral challenges ask users to submit their own photos to ChatGPT or similar AI tools, which then return a polished, often highly personalized image.

It’s easy to see the appeal: the results are beautiful, the format is shareable, and the trends move fast. But beneath the filters and digital glitz lies a serious and often ignored issue: you’re handing over your biometric identity to an AI service that might retain or even repurpose it.

Let’s explore what happens when you submit your face to ChatGPT or related AI tools, what OpenAI’s privacy policy really says, who is protected by zero data retention, how Philippine law applies, and what you give up—legally and ethically—when you chase these trends.

According to OpenAI’s privacy policy, when you use tools like ChatGPT or its image generation features, your prompts, including image uploads, can be stored and reviewed to improve the performance and safety of its models (Dmosyan, 2025). This means your selfies, red studio portraits, or mini figurine photos aren’t simply processed and deleted in real time. In most cases, they’re temporarily saved and may be retained for up to 30 days, or longer under certain legal or security obligations (Bright Inventions, 2023).

While OpenAI claims to take privacy seriously, multiple layers of data retention exist:

The company does allow users to delete their chat history, but as court rulings and legal holds have shown, deletion doesn’t necessarily mean erasure from all internal systems (Harvard Law Review, 2024).

Even worse, OpenAI’s privacy disclosures are dense and difficult for everyday users to parse. When you upload an image, you’re rarely informed in plain language how long that image will live on servers or who might access it internally for audits, safety, or training purposes (Ismailkovvuru, 2025).

This becomes especially problematic with visual data. Unlike text prompts, image files can carry embedded metadata such as geolocation tags and timestamps, and the picture itself captures uniquely identifying features like facial geometry. Your photo is your biometric signature, and once it is uploaded, you lose control over it.
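Embedded metadata, at least, can be removed before a photo ever leaves your device. The snippet below is a minimal sketch rather than a vetted tool. It assumes a standard JPEG whose header is a plain run of length-prefixed segments, and it drops every APP1 segment, which is where Exif data (GPS tags, timestamps) and XMP data are normally stored. The function name `strip_app1_segments` is illustrative, not part of any library. It does nothing about the face in the picture, which stays just as identifiable.

```python
import struct

SOI = b"\xff\xd8"  # start-of-image marker that opens every JPEG
APP1 = 0xE1        # segment type normally used for Exif and XMP metadata
SOS = 0xDA         # start of scan: compressed image data follows


def strip_app1_segments(jpeg_bytes: bytes) -> bytes:
    """Return a copy of a JPEG byte string with APP1 (Exif/XMP) segments removed.

    Simplifying assumption: every marker before the scan data is followed
    by a 2-byte big-endian length. Unusual files (padding bytes, standalone
    markers) are rejected or passed through rather than handled.
    """
    if not jpeg_bytes.startswith(SOI):
        raise ValueError("not a JPEG file (missing SOI marker)")

    out = bytearray(SOI)
    pos = 2
    while pos + 4 <= len(jpeg_bytes):
        if jpeg_bytes[pos] != 0xFF:
            raise ValueError(f"expected a segment marker at offset {pos}")
        marker = jpeg_bytes[pos + 1]
        if marker == SOS:
            # Everything from here on is image data; copy it unchanged.
            out += jpeg_bytes[pos:]
            return bytes(out)
        (length,) = struct.unpack(">H", jpeg_bytes[pos + 2 : pos + 4])
        if length < 2:
            raise ValueError(f"invalid segment length at offset {pos}")
        segment_end = pos + 2 + length  # the length field counts itself
        if marker != APP1:
            out += jpeg_bytes[pos:segment_end]  # keep non-metadata segments
        pos = segment_end

    # No scan data found; keep whatever trailing bytes remain.
    out += jpeg_bytes[pos:]
    return bytes(out)
```

To use it, read a photo's bytes, pass them through the function, and write the result to a new file (for example, from a hypothetical `selfie.jpg` to `selfie_clean.jpg`). Other metadata containers, such as IPTC data in APP13 segments or metadata in PNG and HEIC files, are outside the scope of this sketch.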

To mitigate data privacy concerns, OpenAI introduced a feature called Zero Data Retention (ZDR), particularly for business and enterprise users (JeffKessie50, 2024). Under this policy, user inputs are not stored or used to improve models. ZDR endpoints process data in real time and discard it immediately after response generation.

However, here’s the kicker: ZDR is not available to regular users. If you’re using ChatGPT’s free or Plus tiers—like most Filipinos participating in these viral trends—your data can and will be retained under the default settings.

If you upload a selfie through a non-ZDR endpoint for a figurine-style render or red backdrop portrait, your input may be:

In the context of generative models, this could in principle lead to elements of your likeness resurfacing in outputs for other users, a privacy nightmare that many aren’t aware of until it’s too late.

The Philippines has its own robust data privacy laws under Republic Act No. 10173, otherwise known as the Data Privacy Act of 2012 (DPA). This law protects Filipino citizens from unlawful processing of personal data—including visual or biometric information—and grants them rights such as access, correction, and erasure of their data.

In December 2024, the National Privacy Commission (NPC) issued Advisory No. 2024-04, directly addressing AI systems and how they process personal data (Gorriceta Africa Cauton & Saavedra, 2024). The advisory laid down key guidelines:

Even when a Filipino user voluntarily submits their photo to ChatGPT, the AI system (or its developers) must comply with DPA obligations if it targets or affects Filipino citizens. However, enforcement becomes difficult because OpenAI is headquartered in the U.S., creating jurisdictional challenges in applying local privacy rights across borders.

This leaves Filipino users in a vulnerable position: you have rights, but limited enforcement tools. And once a viral trend takes off, millions of photos may already be sitting in a server outside the country—potentially outside your legal reach.

In what could be a landmark case for data privacy and AI, The New York Times sued OpenAI in 2023 for unauthorized use of its copyrighted materials during model training. As part of the lawsuit, a U.S. court ordered OpenAI to preserve all user data, even if users deleted their chats (Harvard Law Review, 2024).

This is where things get real.

OpenAI’s usual privacy policy allows users to delete their conversations. But under legal orders, those deletions are paused—user data is retained indefinitely in segregated systems for legal compliance (Huntress, 2024).

If you uploaded your image to ChatGPT in the past year, thinking it was deleted when you clicked “clear history,” it might still be stored on OpenAI’s servers due to this court order.

OpenAI has publicly stated that this order does not apply to enterprise or ZDR users (OpenAI, 2024). But for regular users—especially those participating in these image-based trends—there is no guaranteed deletion.

This situation brings up critical ethical and legal concerns:

Returning to the viral trends—Red Studio portraits, doll-style figures, and stylized selfies—here’s what you’re really giving up:

Your face isn’t just data. It’s a unique identifier that can be matched, analyzed, and linked to online profiles. You might not realize that the selfie you submitted could be used to track or profile you, or be reused in unknown future outputs.

If your input is not covered by ZDR, it may be used to further train OpenAI’s models. That means pieces of your likeness could resurface elsewhere. It’s not paranoia—it’s already happening with image generators trained on publicly sourced datasets (IFA Magazine, 2024).

Your stylized photo could be reverse engineered or scraped by malicious actors. It only takes a bit of metadata to expose your location, identity, or social connections. Even harmless “fun” challenges can become attack vectors.

The “delete” button feels reassuring, but as seen in the NYT case, deletion doesn’t always mean erasure. Once your face is uploaded, you lose practical control over where and how it’s used.

Your rights under Philippine law are strong in theory—but OpenAI is not a local company. Legal recourse is slow, limited, and difficult to enforce unless coordinated with international data protection agencies.

Viral trends come and go. Your data doesn’t.

Submitting your face to ChatGPT, especially through fun challenges, opens you up to privacy loss, identity risk, and permanent exposure. OpenAI’s privacy promises don’t fully apply to standard users. Zero Data Retention is not the default. And your “right to delete” might be suspended by court orders you’ll never see.

In the end, you have to ask: Is a stylized cartoon version of yourself worth giving up your digital autonomy?

We say this not to scare you but to empower you to make informed choices. AI isn’t evil, but it’s not neutral either. Tools like ChatGPT are shaped by policies, incentives, and legal realities that often prioritize innovation over individual safety.

So next time a friend invites you to join a new AI trend, pause. Think. Then maybe pass.

Bright Inventions. (2023, November 13). OpenAI API privacy policies explained.

Dmosyan, D. (2025, February 6). I have read the OpenAI Privacy Policy: Here is what you need to know. Medium.

Futurism. (2024, April 21). Hackers trick ChatGPT into leaking users’ private data.

Gorriceta Africa Cauton & Saavedra. (2024, December 20). Philippines’ NPC releases guidelines on AI systems.

Harvard Law Review. (2024, April 3). NYT v. OpenAI: The Times’s about-face.

Huntress. (2024, June 12). OpenAI, court orders, and the state of cybersecurity privacy.

IFA Magazine. (2024, December 17). ChatGPT AI Barbie trend: Expert warns it could come at a cost.

Ismailkovvuru, I. (2025, March 9). ChatGPT privacy leak 2025: Deep dive, real-world impact, and industry lessons. Medium.

JeffKessie50. (2024, December 1). OpenAI’s zero data retention policy.

OpenAI. (2024, April 6). Response to NYT data demands.